PURPOSE-BUILT HARDWARE FOR EDGE AI

High-performance processing on power-constrained devices

Neural Decision Processors

Our family of Neural Decision Processors (NDPs) is specifically designed to run deep learning models, providing 100x the efficiency and 10/30x the throughput of existing low-power MCUs.

From acoustic event detection for security applications to video processing in teleconferencing, our hardware can equip almost any device with powerful advanced intelligence, enabling real-time data processing and decision making with near-zero latency.

Seamless Integration

Our core architectures process neural network layers directly without any compilers, enabling larger networks at significantly lower power, greatly increasing battery life, privacy and responsiveness, while decreasing infrastructure costs.

With I/O support for a variety of sensor modalities, including vision, audio and motion, our NDPs bring AI to edge applications in all kinds of domains, from earbuds and doorbells to automobiles and manufacturing machinery.

Neural Decision Processor Portfolio

| Part | Status | Primary Modality | Package | Syntiant DNN* Core: Memory | Syntiant DNN* Core: Clock | Syntiant DNN* Core: Features | MCU: Memory | MCU: Clock | DSP: Memory | DSP: Clock | DSP: Features |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| NDP100 | Mass Production | Audio, Sensors | 1.4mm x 1.8mm, 12-ball WLBGA | params: 589k¹ | < 16MHz | 4 Layer, Fully Connected | M0, 112kB | < 16MHz | N/A | N/A | N/A |
| NDP101 | Mass Production | Audio, Sensors | 5mm x 5mm, 32-pin QFN | params: 589k¹ | < 16MHz | 4 Layer, Fully Connected | M0, 112kB | < 16MHz | N/A | N/A | N/A |
| NDP102 | Mass Production | Audio, Sensors | 1.7mm x 2.5mm, 20-ball eWLB | params: 589k¹ | < 16MHz | 4 Layer, Fully Connected | M0, 112kB | < 16MHz | N/A | N/A | N/A |
| NDP115 | Mass Production | Audio, Sensors | 2mm x 2mm, 25-ball WLBGA | pmem: 448kB; dmem: 64kB | Same as DSP | All Networks, Fixed Point | M0, 48kB | Same as DSP | imem: 64kB; dmem: 144kB | 0.9V: < 22MHz; 1.0V: < 60MHz; 1.1V: < 100MHz | HiFi3: Fixed-point |
| NDP120 | Mass Production | Audio, Sensors | 3.1mm x 2.5mm, 42-ball WLBGA & 5mm x 5mm, 40-pin QFN | pmem: 896kB; dmem: 128kB | Same as DSP | All Networks, Fixed Point | M0, 48kB | Same as DSP | imem: 96kB; dmem: 192kB | 0.9V: < 22MHz; 1.0V: < 60MHz; 1.1V: < 100MHz | HiFi3: Fixed-point |
| NDP200 | Mass Production | Computer Vision | 5mm x 5mm, 40-pin QFN | pmem: 896kB; dmem: 128kB | Same as DSP | All Networks, Fixed Point | M0, 48kB | Same as DSP | imem: 96kB; dmem: 192kB | 0.9V: < 22MHz; 1.0V: < 60MHz; 1.1V: < 100MHz | HiFi3: Fixed-point |
| NDP250 | Sampling | Computer Vision, Sensor, Speech | 6.1mm x 5.1mm, 120-ball eWLB | 6MB | Same as DSP | All Networks, Fixed Point | 0.5MB | Same as DSP | 1.5MB | 0.8V: < 80MHz; 0.9V: < 120MHz | HiFi3: Floating-point |
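The voltage-dependent DSP clock limits in the portfolio table lend themselves to a quick lookup when budgeting power. The following is a minimal sketch, not a Syntiant tool: the function name `lowest_voltage_for_clock` and the dictionary layout are hypothetical, and the only data used are the three operating points listed for the NDP115, NDP120 and NDP200. It assumes the listed values are strict upper bounds, as the "<" in the table suggests.

```python
from typing import Optional

# DSP clock ceilings per supply voltage, copied from the portfolio table
# (the NDP115, NDP120 and NDP200 rows list the same three operating points).
# Keys are supply voltages in volts; values are clock ceilings in MHz.
NDP120_DSP_CLOCK_LIMITS_MHZ = {0.9: 22.0, 1.0: 60.0, 1.1: 100.0}


def lowest_voltage_for_clock(
    target_mhz: float, limits: dict[float, float]
) -> Optional[float]:
    """Return the lowest listed voltage whose clock ceiling exceeds target_mhz.

    Hypothetical helper for illustration only. Returns None when no listed
    operating point can reach the requested clock.
    """
    for voltage in sorted(limits):
        # Strict comparison: the table states the ceilings as "< N MHz".
        if target_mhz < limits[voltage]:
            return voltage
    return None


if __name__ == "__main__":
    for mhz in (16, 48, 98, 120):
        volts = lowest_voltage_for_clock(mhz, NDP120_DSP_CLOCK_LIMITS_MHZ)
        print(f"{mhz} MHz -> {volts}")
```

Swapping in the NDP250 operating points (0.8V and 0.9V) only requires a different dictionary; the lookup itself does not change.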

AISonic™ Audio Edge Processors

Our AISonic™ Audio Edge Processors are optimized for machine learning, delivering high-compute, low-power operation for voice activation, hands-free control and contextual audio processing in a range of mobile devices, TWS headsets, portable speakers, TVs and other IoT devices.

Syntiant Powered Platforms


Syntiant Evaluation Kits

Syntiant evaluation kits are designed to help customers bring up the solution quickly and access its features easily. Each evaluation kit consists of a shield, featuring our Neural Decision Processor, connected to a Raspberry Pi Model 3B+ single-board computer.


RASynBoard

Developed in collaboration with Avnet and Renesas, the RASynBoard is a small, ultra-low power, edge AI/ML board, featuring a Syntiant NDP120, a Renesas RA6M4 host MCU plus a power-efficient DA16600 Wi-Fi/BT combo module.


Nicla Voice

Built in collaboration with Arduino and powered by the Syntiant NDP120, the Nicla Voice platform allows for easy implementation of always-on speech recognition at the edge, enabling multiple concurrent AI models.


TinyML Development Platform

Syntiant’s Tiny Machine Learning (“TinyML”) Development Platform, featuring the NDP101, is the ideal board for building low-power voice, acoustic event detection (AED) and sensor ML applications. Developed in collaboration with Edge Impulse, our developer kit provides broad access to Syntiant’s technology.


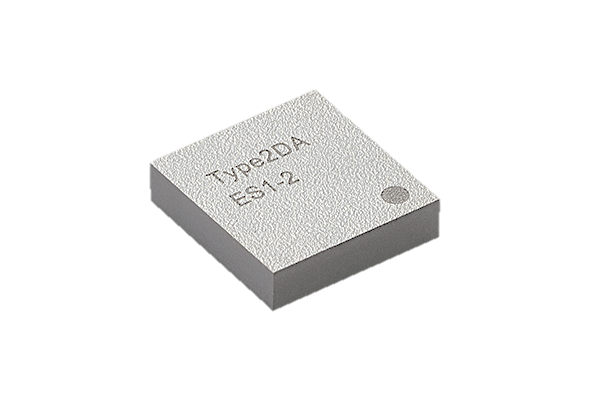

Murata Type2DA AI Module

Murata’s Type2DA AI Module is a compact (6.1mm x 5.8mm) multi-chip module containing a Syntiant NDP102, an MCU, a crystal oscillator, an LDO and flash memory, making it ideal for TinyML audio and sensor applications.


Eoxys ML SOMs

Eoxys’ XENO+ ML (machine learning) SOM (system-on-module), built on our NDP120 with Syntiant’s second-generation Core 2 neural network engine, brings highly accurate, low-power inference to edge devices in both consumer and industrial segments. The XENO+ ML SOMs combine AI and machine learning capabilities with Wi-Fi, BLE and LTE wireless connectivity.